Efficient SQL is essential for fast, responsive queries in LogicLoop. LogicLoop's SQL integration lets you interact with databases seamlessly, but a poorly written query can still be slow and resource-hungry. This guide explains how to write efficient SQL queries tailored to the LogicLoop environment.

LogicLoop allows you to seamlessly automate your workflows using SQL queries. It's important to understand how SQL is utilized within LogicLoop's ecosystem to write efficient queries.

LogicLoop's execution environment may have unique characteristics such as workflow orchestration, integration with other services, and specific performance considerations. Understanding how LogicLoop executes SQL queries can help you tailor your SQL writing and optimization efforts.

Design a well-structured database schema that aligns with your application's requirements and query patterns.

Utilize indexing effectively by placing indexes on columns commonly used in WHERE clauses, JOIN conditions, and ORDER BY clauses.

Consider denormalization for read-heavy workloads, caching, or reporting to reduce JOIN operations and enhance query performance.

Avoid SELECT * unless you truly need every column. Instead, be specific and retrieve only the columns your query needs. This saves time and resources and reduces the risk of exposing sensitive data. It also improves the performance of your application or system, especially when dealing with large datasets, and keeps your queries easier to read and maintain in the long run.

It is recommended to use Common Table Expressions (CTEs) to filter data as much as possible before joining tables. A CTE can be thought of as a named temporary result set that is created on the fly and can be referenced later in the same statement. CTEs let you break complex queries into smaller, simpler components, and filtering early can improve performance by reducing the amount of data the rest of the query has to process.

CTEs can also replace subqueries in some cases. By defining a CTE that filters the data in advance, the rest of the query can be simplified and made more efficient. This approach can be particularly effective when working with large datasets or complex queries.
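As a minimal sketch of filtering in a CTE before joining, the example below runs the SQL against an in-memory SQLite database through Python's built-in `sqlite3` module so that it is runnable anywhere. The `users` and `events` tables and their columns are hypothetical, and the same `WITH` pattern should carry over to the database behind your LogicLoop query with minor syntax changes.

```python
import sqlite3

# Hypothetical schema and data, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE events (user_id INTEGER, event_type TEXT, ds TEXT);
    INSERT INTO users VALUES (1, 'ana'), (2, 'ben');
    INSERT INTO events VALUES
        (1, 'login',    '2024-01-01'),
        (1, 'purchase', '2024-01-02'),
        (2, 'login',    '2024-01-02'),
        (2, 'purchase', '2023-12-31');
""")

# The CTE narrows events to recent purchases first, so the join
# only touches the rows that can appear in the result.
QUERY = """
WITH recent_purchases AS (
    SELECT user_id, ds
    FROM events
    WHERE event_type = 'purchase'
      AND ds >= '2024-01-01'
)
SELECT u.name, p.ds
FROM recent_purchases AS p
JOIN users AS u ON u.id = p.user_id
ORDER BY u.name
"""

rows = conn.execute(QUERY).fetchall()
print(rows)  # [('ana', '2024-01-02')]
```

Whether the database materializes the CTE or inlines it is engine-specific, so it is worth checking the query plan on your own database.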

When working with SQL, using appropriate data types is crucial for ensuring that your queries perform well. Selecting the right data type for each column in your database tables can help to minimize response time, optimize resource usage, and improve scalability.

There are many different data types available in SQL, including numeric data types (such as INT, DECIMAL, and FLOAT), character data types (such as CHAR and VARCHAR), date and time data types (such as DATE and TIMESTAMP), and more. When choosing a data type, it is important to consider the range of values that will be stored in the column, as well as the size of the data and the performance implications of using different data types.

For example, if you are storing small integers, you might use a smaller integer type such as TINYINT or SMALLINT (availability depends on your database), which takes up less space than INT or BIGINT. If you are storing strings with a fixed length, such as two-letter country codes, CHAR can be a good fit, although whether it is more efficient than VARCHAR depends on the database. If you are storing dates and times, you might use the TIMESTAMP data type rather than a string, which allows for precise calculations of time intervals.

In addition to selecting appropriate data types for your columns, you should also be mindful of how you use data types in your queries. For example, when comparing values of different data types, SQL will automatically convert one type to another as needed. However, this can be inefficient and can lead to unexpected results if the conversion is not done correctly. To avoid these issues, it is best to explicitly cast values to the appropriate data type before performing any comparisons or calculations.
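The sketch below illustrates how a comparison can silently go wrong when numbers are stored as text, and how an explicit `CAST` fixes it. It assumes SQLite via Python's `sqlite3` module; the `readings` table is hypothetical, and implicit conversion rules differ between databases, so treat this as an illustration of the pitfall, not a description of your database's exact behavior.

```python
import sqlite3

# Hypothetical table where numeric values were stored in a TEXT column.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE readings (sensor TEXT, value TEXT);
    INSERT INTO readings VALUES ('a', '9'), ('b', '10'), ('c', '100');
""")

# Text comparison is character by character: '10' and '100' sort
# before '9', so no row counts as "greater than 9".
as_text = conn.execute(
    "SELECT sensor FROM readings WHERE value > '9' ORDER BY sensor"
).fetchall()

# Casting explicitly makes the comparison numeric.
as_int = conn.execute(
    "SELECT sensor FROM readings "
    "WHERE CAST(value AS INTEGER) > 9 ORDER BY sensor"
).fetchall()

print(as_text)  # []
print(as_int)   # [('b',), ('c',)]
```

The longer-term fix is to store the column with a numeric type in the first place, which makes the cast unnecessary.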

When it comes to joining tables, it's important to be strategic. Avoid joining against entire event tables or dimension tables. Instead, filter each table down to the subset of rows you need, such as a date range or a set of event types, before the join. This helps your queries run efficiently and gives you the insights you need without overwhelming your system. So, while it's important to join tables when necessary, it's equally important to do so in a thoughtful and targeted way.

Indexes can significantly improve query performance by allowing the database to quickly find the data you need. When you create an index, the database creates a data structure that allows it to quickly find the rows that contain the data you are looking for. This can save a lot of time and resources when running queries. However, you should be careful not to over-index, as this can slow down insert and update operations. Creating too many indexes can also take up a lot of disk space, which can be a problem if you have limited storage capacity.
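One way to confirm that an index is actually used is to inspect the query plan before and after creating it. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module. The `events` table and the index name are hypothetical, and other databases expose plans through their own commands (for example `EXPLAIN` in Postgres) with different output.

```python
import sqlite3

# Hypothetical events table with 1,000 rows spread over 100 users.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, ds TEXT)"
)
conn.executemany(
    "INSERT INTO events (user_id, ds) VALUES (?, ?)",
    [(i % 100, "2024-01-01") for i in range(1000)],
)


def plan(sql):
    """Return the human-readable plan steps SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)  # 4th column holds the detail text


query = "SELECT id FROM events WHERE user_id = 42"

before = plan(query)  # full table scan: no index on user_id yet
conn.execute("CREATE INDEX idx_events_user_id ON events (user_id)")
after = plan(query)   # search using idx_events_user_id

print("before:", before)
print("after: ", after)
```

The exact plan wording varies between SQLite versions, so the useful signal is the change from a scan of the table to a search that names the index.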

You can use INNER JOIN, LEFT JOIN, and RIGHT JOIN to combine data from multiple tables. Each join type has its own strengths and weaknesses, and the appropriate join type should be chosen based on the relationship between the tables and the data you need. INNER JOIN returns only the rows that have matching values in both tables, LEFT JOIN returns all the rows from the left table and the matching rows from the right table, and RIGHT JOIN returns all the rows from the right table and the matching rows from the left table. Be careful not to join too many tables, as this can slow down query performance.
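The difference between INNER JOIN and LEFT JOIN is easiest to see on a small dataset. The sketch below assumes SQLite via Python's `sqlite3` module with hypothetical `customers` and `orders` tables. RIGHT JOIN is left out because it is the mirror image of LEFT JOIN and is not available in older SQLite versions.

```python
import sqlite3

# Hypothetical data: customer 'cy' has no orders.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'ana'), (2, 'ben'), (3, 'cy');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# INNER JOIN keeps only customers that have at least one matching order.
inner_rows = conn.execute("""
    SELECT c.name, o.total
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name, o.total
""").fetchall()

# LEFT JOIN keeps every customer; missing order columns come back as NULL.
left_rows = conn.execute("""
    SELECT c.name, o.total
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name, o.total
""").fetchall()

print(inner_rows)  # [('ana', 25.0), ('ana', 40.0), ('ben', 15.0)]
print(left_rows)   # [('ana', 25.0), ('ana', 40.0), ('ben', 15.0), ('cy', None)]
```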

Subqueries can be useful, but they can also be slow. A subquery is a query that is embedded within another query. In some cases, you can rewrite a subquery as a join to improve performance. When using subqueries, make sure they return a small amount of data. If a subquery returns a large amount of data, it can slow down query performance and use up a lot of resources.
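To illustrate rewriting a subquery as a join, the sketch below counts orders per customer in two ways: with a correlated subquery that is evaluated per customer row, and with a LEFT JOIN plus GROUP BY. It assumes SQLite via Python's `sqlite3` module and hypothetical `customers` and `orders` tables. Which form is faster depends on the database and its optimizer, so compare query plans before committing to one.

```python
import sqlite3

# Hypothetical data: customer 'cy' has no orders.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'ana'), (2, 'ben'), (3, 'cy');
    INSERT INTO orders VALUES (10, 1), (11, 1), (12, 2);
""")

# Correlated subquery: logically runs once for each customer row.
with_subquery = conn.execute("""
    SELECT c.name,
           (SELECT COUNT(*) FROM orders AS o WHERE o.customer_id = c.id) AS n
    FROM customers AS c
    ORDER BY c.name
""").fetchall()

# Equivalent join: COUNT(o.id) ignores the NULLs produced for customers
# without orders, so they still get a count of 0.
with_join = conn.execute("""
    SELECT c.name, COUNT(o.id) AS n
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.name
""").fetchall()

print(with_subquery)  # [('ana', 2), ('ben', 1), ('cy', 0)]
print(with_join)      # [('ana', 2), ('ben', 1), ('cy', 0)]
```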

When writing SQL queries, it is recommended to avoid using functions in JOIN conditions, because wrapping a column in a function can prevent the database from using an index on that column. Instead, try using a subquery or a CTE to calculate the values beforehand. This can help improve the performance of your queries and make them easier to read and maintain. Additionally, a subquery or CTE lets you reuse the calculated values in multiple parts of the query instead of recalculating them each time they are needed.
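A small sketch of moving a function out of the join condition: instead of joining on `LOWER(a.email) = LOWER(b.email)`, each side computes a normalized key once in a CTE and the join compares plain columns. It assumes SQLite via Python's `sqlite3` module; the `signups` and `payments` tables and the `email_key` alias are hypothetical. How much this helps depends on the engine, and storing a pre-normalized, indexed column is often the stronger fix.

```python
import sqlite3

# Hypothetical tables whose email columns differ only in letter case.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE signups (email TEXT, plan TEXT);
    CREATE TABLE payments (email TEXT, amount REAL);
    INSERT INTO signups VALUES ('Ana@Example.com', 'pro'), ('ben@example.com', 'free');
    INSERT INTO payments VALUES ('ana@example.com', 30.0), ('BEN@example.com', 5.0);
""")

# Each CTE computes the normalized key once; the join condition itself
# is a plain column comparison with no function call.
QUERY = """
WITH s AS (
    SELECT LOWER(email) AS email_key, plan FROM signups
),
p AS (
    SELECT LOWER(email) AS email_key, amount FROM payments
)
SELECT s.email_key, s.plan, p.amount
FROM s
JOIN p ON p.email_key = s.email_key
ORDER BY s.email_key
"""

matched = conn.execute(QUERY).fetchall()
print(matched)
# [('ana@example.com', 'pro', 30.0), ('ben@example.com', 'free', 5.0)]
```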

The EXISTS and IN operators can replace a JOIN when you only need to check whether matching rows exist and do not need columns from the other table. Used this way, they can improve query performance and make the code more readable. However, JOINs are not always bad, and there are cases where they are necessary. When using EXISTS or IN, it is important to understand how they work and how they differ from JOINs. Additionally, it is recommended to use subqueries with EXISTS or IN instead of joining large tables where possible, as this can further improve performance.
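The sketch below contrasts a JOIN with EXISTS for the question "which customers have at least one order?". It assumes SQLite via Python's `sqlite3` module and hypothetical `customers` and `orders` tables. The JOIN returns one row per matching order, while EXISTS only checks for a match and returns each customer once.

```python
import sqlite3

# Hypothetical data: 'ana' has two orders, 'ben' one, 'cy' none.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'ana'), (2, 'ben'), (3, 'cy');
    INSERT INTO orders VALUES (10, 1), (11, 1), (12, 2);
""")

# JOIN: one output row per (customer, order) pair, so 'ana' is duplicated.
joined = conn.execute("""
    SELECT c.name
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name
""").fetchall()

# EXISTS: the subquery only has to find a match, so each customer
# appears at most once and no deduplication step is needed.
existing = conn.execute("""
    SELECT c.name
    FROM customers AS c
    WHERE EXISTS (SELECT 1 FROM orders AS o WHERE o.customer_id = c.id)
    ORDER BY c.name
""").fetchall()

print(joined)    # [('ana',), ('ana',), ('ben',)]
print(existing)  # [('ana',), ('ben',)]
```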

When writing SQL queries, it is often tempting to use the DISTINCT keyword to remove duplicate rows from the result set. However, DISTINCT can come at a cost in terms of performance, because the database has to sort or hash the full result to find duplicates. Instead, consider other ways to eliminate duplicates, such as grouping by the desired columns or avoiding the duplicates in the first place. Depending on the database, GROUP BY can perform better, and it is more flexible because you can add aggregates to the same query. So, while it may be tempting to default to DISTINCT, it's worth taking the time to explore alternative solutions to achieve the same result.
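A brief sketch of replacing DISTINCT with GROUP BY, assuming SQLite via Python's `sqlite3` module and a hypothetical `events` table. Both queries return the same set of users, and the grouped form can carry an aggregate such as an event count at no extra cost in query complexity. Many optimizers plan the two forms identically, so any speed difference should be verified on your own database.

```python
import sqlite3

# Hypothetical events table with repeated user_id values.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE events (user_id INTEGER, event_type TEXT);
    INSERT INTO events VALUES (1, 'login'), (1, 'purchase'), (2, 'login');
""")

# DISTINCT removes duplicate user_id values.
distinct_users = conn.execute(
    "SELECT DISTINCT user_id FROM events ORDER BY user_id"
).fetchall()

# GROUP BY yields the same users and can also aggregate per user.
grouped_users = conn.execute("""
    SELECT user_id, COUNT(*) AS event_count
    FROM events
    GROUP BY user_id
    ORDER BY user_id
""").fetchall()

print(distinct_users)  # [(1,), (2,)]
print(grouped_users)   # [(1, 2), (2, 1)]
```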

String operations are commonly used in SQL queries. Here are some techniques you can use to optimize string operations in SQL:

LogicLoop allows you to schedule queries to run at intervals ranging from every few minutes to every few weeks, which means each run only needs to pull the latest data instead of whole tables to trigger your actions. For example, if your query is scheduled to run every day, you only need to pull the data from the previous day, as below:

    SELECT column1, column2
    FROM dim_events_table
    WHERE ds = current_date - INTEGER '1'

The query above is written for a Postgres database. Most other databases provide similar date functions in their own SQL dialect.

Pulling only the latest data reduces the amount of data each query has to process and can significantly reduce your query run time.

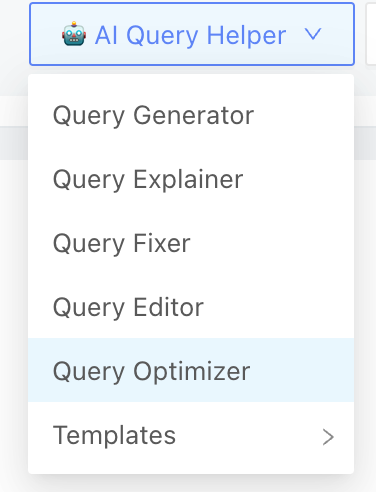

LogicLoop supports a set of AI SQL Helper features to improve your experience interacting with SQL. By using the AI SQL Optimizer, you can take advantage of LogicLoop's machine learning capabilities to improve query performance and reduce manual optimization effort. The AI SQL Optimizer analyzes your SQL queries and suggests optimizations, applying the strategies described above directly to your queries. This can save time and effort while improving query performance.

Ask AI in LogicLoop allows you to ask follow-up questions to improve your SQL queries. It can provide suggestions to optimize your queries based on your specific use case and data. This can save you time and effort in optimizing your queries manually. Additionally, Ask AI can help identify patterns in your data and suggest changes to your database schema or indexing strategies to improve query performance.

LogicLoop also allows you to create alerts that monitor your own query schedules. This is an advanced feature. Feel free to contact support@logicloop.com to learn more.

Prioritize simplicity and clarity in your SQL queries to make them more maintainable and understandable.

Always use parameterized queries to prevent SQL injection and improve query plan reuse.
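A minimal sketch of a parameterized query, assuming SQLite via Python's `sqlite3` module, which uses `?` as its placeholder. The `users` table and the `find_user` helper are hypothetical, and placeholder syntax differs between database drivers and tools. The point is that user input is passed as a bound value and never spliced into the SQL text.

```python
import sqlite3

# Hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    INSERT INTO users VALUES (1, 'ana'), (2, 'ben');
""")


def find_user(connection, name):
    """Look up users by exact name using a bound parameter."""
    return connection.execute(
        "SELECT id, name FROM users WHERE name = ?",
        (name,),  # bound as data, never interpreted as SQL
    ).fetchall()


normal = find_user(conn, "ana")
# A classic injection attempt is treated as a literal string and matches nothing.
attack = find_user(conn, "ana' OR '1'='1")

print(normal)  # [(1, 'ana')]
print(attack)  # []
```

Because the SQL text stays identical across calls, the database can also reuse the prepared statement or cached plan instead of re-parsing a new string each time.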

Stay updated with LogicLoop's SQL features, best practices, and performance optimization techniques to adapt to evolving requirements and technologies.

Efficient SQL queries are the foundation of high-performance applications in LogicLoop. By following the guidelines and best practices outlined in this guide, you can write SQL queries that not only execute quickly but also utilize system resources efficiently. Continuous monitoring, maintenance, and a commitment to ongoing learning are essential for maintaining optimal query performance as your application and database evolve.